Amazon and NVIDIA: Pioneering the Future of Generative AI – A Deep Dive into Their Expanded Collaboration

In the ever-evolving landscape of technology, two titans, Amazon Web Services (AWS) and NVIDIA, have announced a significant expansion of their strategic collaboration. Unveiled at AWS re:Invent 2023, this development is more than a routine partnership update. It marks a pivotal moment for generative artificial intelligence (AI), with both companies pooling their resources and expertise to open up new possibilities in the field.

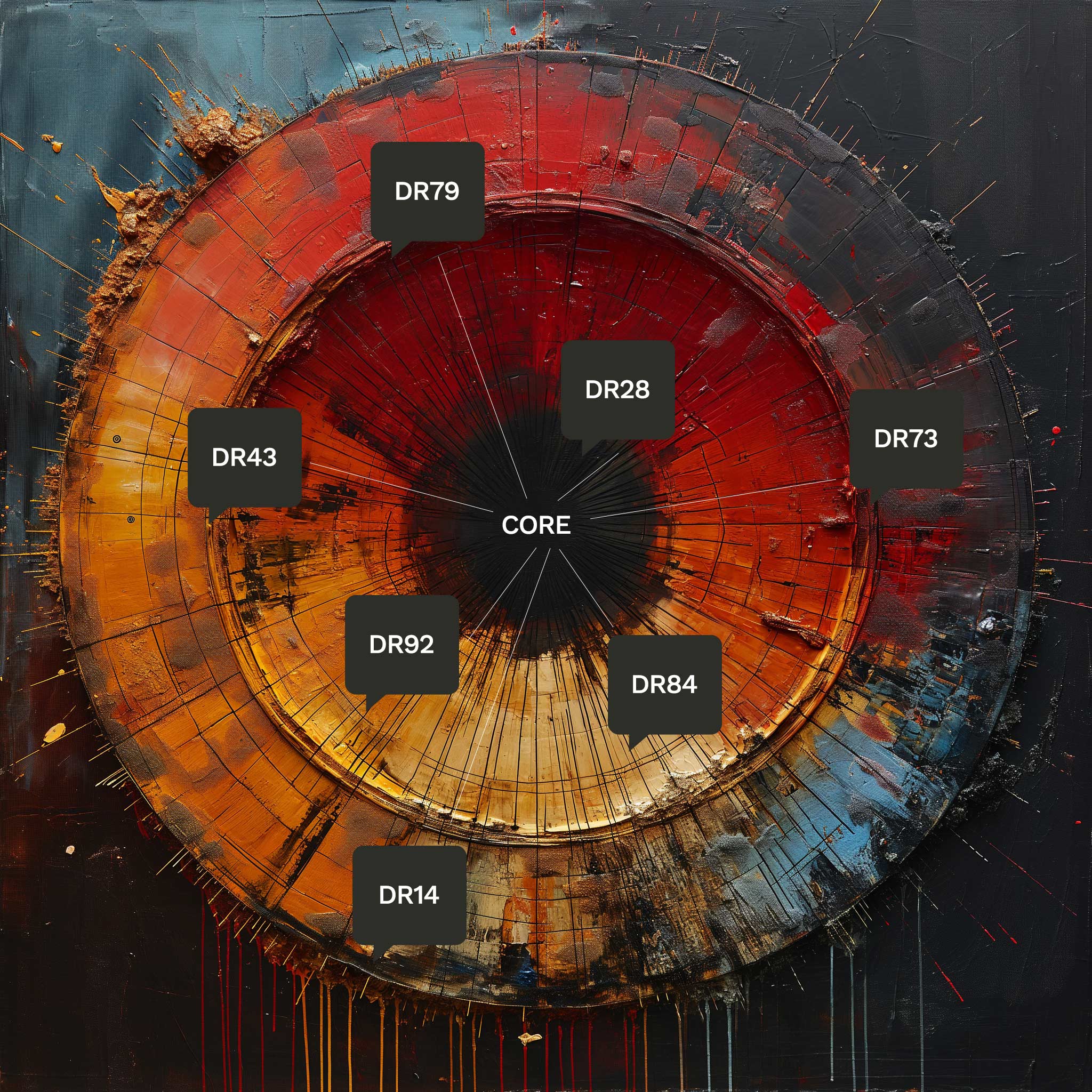

The Essence of the Collaboration

At its core, this partnership combines the strengths of both companies. NVIDIA, renowned for its cutting-edge GPU technology, brings its latest multi-node systems, which include next-generation GPUs, CPUs, and AI software. AWS complements this with its cloud infrastructure: advanced virtualization through the Nitro System, the Elastic Fabric Adapter (EFA) interconnect for high-performance networking, and the scalability of EC2 UltraClusters, which is essential for meeting the heavy computational demands of generative AI.

Introducing the NVIDIA GH200 Grace Hopper Superchips

A centerpiece of this collaboration is bringing NVIDIA's GH200 Grace Hopper Superchips to the AWS cloud. AWS will be the first cloud provider to offer these superchips with NVIDIA's new multi-node NVLink technology. The NVIDIA GH200 NVL32 multi-node platform is particularly noteworthy: it connects 32 Grace Hopper Superchips through NVIDIA's NVLink and NVSwitch technologies so that they operate as a single instance. The platform will be available on Amazon EC2 instances, backed by AWS's high-performance networking (EFA), advanced virtualization (the Nitro System), and hyper-scale clustering (EC2 UltraClusters). This lets users scale their AI workloads to thousands of GH200 Superchips and reach supercomputer-class performance.
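Because each NVL32 system groups a fixed 32 superchips, sizing a deployment in NVL32 units is a simple ceiling division. The sketch below assumes capacity is allocated in whole NVL32 systems; the article does not say how AWS actually provisions GH200 capacity, and the function name `nvl32_systems_needed` is hypothetical.

```python
# From the article: one GH200 NVL32 system links 32 Grace Hopper Superchips.
SUPERCHIPS_PER_NVL32 = 32


def nvl32_systems_needed(superchips: int) -> int:
    """Hypothetical helper: smallest number of whole NVL32 systems that
    can hold `superchips` chips (integer ceiling division)."""
    if superchips <= 0:
        raise ValueError("superchips must be positive")
    return (superchips + SUPERCHIPS_PER_NVL32 - 1) // SUPERCHIPS_PER_NVL32


if __name__ == "__main__":
    # A few illustrative cluster sizes, from one system to "thousands" of chips.
    for n in (32, 1_000, 4_096):
        print(f"{n:>6} superchips -> {nvl32_systems_needed(n)} NVL32 systems")
```

Under this assumption, 1,000 superchips would need 32 NVL32 systems, since 31 systems hold only 992 chips.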

NVIDIA DGX Cloud Hosted on AWS

Another significant aspect of this collaboration is the hosting of DGX Cloud, NVIDIA's AI-training-as-a-service, on AWS. DGX Cloud on AWS will feature the GH200 NVL32, a notable step forward for training generative AI and large language models. It gives developers the largest shared memory in a single instance, which is crucial for the complex, data-intensive work of AI training.

Project Ceiba: A Supercomputer Vision

AWS and NVIDIA's joint efforts extend to an ambitious undertaking named Project Ceiba, which aims to build the world's fastest GPU-powered AI supercomputer. The system is planned to include 16,384 NVIDIA GH200 Superchips and is expected to deliver 65 exaflops of AI processing. The project illustrates the scale and ambition of this collaboration and is intended to significantly advance AI research and development.
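Dividing the headline figure by the chip count gives a rough sense of what each superchip contributes. This is a back-of-the-envelope sketch that assumes the 65 exaflops is an aggregate peak spread evenly across all 16,384 chips; the function name is hypothetical.

```python
# Figures quoted in the article for Project Ceiba.
CEIBA_SUPERCHIPS = 16_384
CEIBA_EXAFLOPS = 65

PETAFLOPS_PER_EXAFLOP = 1_000


def per_chip_petaflops(total_exaflops: float, chips: int) -> float:
    """Hypothetical helper: average throughput per chip, assuming the
    aggregate figure divides evenly across all chips."""
    return total_exaflops * PETAFLOPS_PER_EXAFLOP / chips


if __name__ == "__main__":
    pf = per_chip_petaflops(CEIBA_EXAFLOPS, CEIBA_SUPERCHIPS)
    print(f"~{pf:.2f} petaflops per GH200 Superchip")
```

The result, roughly 3.97 petaflops per chip, is close to the low-precision (FP8, with sparsity) Tensor Core peak NVIDIA publishes for H100-class GPUs. That suggests, as an inference rather than a stated fact, that the 65-exaflop figure measures AI-oriented low-precision math, not double-precision HPC throughput.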

The Introduction of New Amazon EC2 Instances

To accommodate a range of AI and high-performance computing (HPC) workloads, AWS is rolling out three new Amazon EC2 instance types. P5e instances, powered by NVIDIA H200 Tensor Core GPUs, are designed for large-scale generative AI and HPC workloads. G6 and G6e instances, powered by NVIDIA L4 and L40S GPUs respectively, suit a wide array of applications, from AI fine-tuning to graphics and video workloads. G6e instances are particularly well suited to developing 3D workflows, digital twins, and other applications built with NVIDIA Omniverse.
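The three new families can be summarized as a small lookup table that maps each family to its GPU and target workloads. This is an illustrative sketch built only from the descriptions in this article. It is not an AWS API, it lists no instance sizes, and the workload tags and the `families_for` helper are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InstanceFamily:
    name: str
    gpu: str
    workloads: frozenset  # hypothetical workload tags, drawn from the article


# Illustrative catalog based solely on the article's descriptions.
FAMILIES = (
    InstanceFamily("P5e", "NVIDIA H200 Tensor Core",
                   frozenset({"generative-ai", "hpc"})),
    InstanceFamily("G6", "NVIDIA L4",
                   frozenset({"fine-tuning", "graphics", "video"})),
    InstanceFamily("G6e", "NVIDIA L40S",
                   frozenset({"fine-tuning", "graphics", "video",
                              "3d-workflows", "digital-twins", "omniverse"})),
)


def families_for(workload: str) -> list:
    """Return the names of families whose tags include `workload`, in catalog order."""
    return [f.name for f in FAMILIES if workload in f.workloads]


if __name__ == "__main__":
    for tag in ("hpc", "video", "omniverse"):
        print(f"{tag}: {families_for(tag)}")
```

In practice, choosing an instance also depends on GPU memory, price, and regional availability, none of which this table captures.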

Beyond Hardware: Software Innovations

Hardware is just one part of the equation. NVIDIA also announced new software on AWS to boost generative AI development. This includes NeMo Retriever, a microservice for building accurate chatbots and summarization tools, and BioNeMo, which aims to speed up drug discovery for pharmaceutical companies. These offerings show that the collaboration extends beyond raw computational power to practical applications in various sectors.

Understanding the Broader Implications of the AWS-NVIDIA Alliance

As we continue to explore the expanded collaboration between Amazon Web Services (AWS) and NVIDIA, it’s crucial to understand the broader context and potential impact of this alliance. This partnership isn’t just about technological advancements; it’s about shaping the future of how businesses, developers, and consumers interact with and leverage AI technologies.

Democratizing AI through Accessibility and Scalability

One of the most significant implications of this collaboration is the democratization of AI. By integrating NVIDIA's advanced GPU technology with AWS's cloud infrastructure, the partnership lowers the barriers to entry for businesses and developers who want to explore and innovate with generative AI. With NVIDIA GH200 Grace Hopper Superchips and NVIDIA DGX Cloud available on AWS, even smaller organizations can access supercomputer-class performance, a capability that has largely been reserved for well-funded corporations and research institutions.

Fueling Innovation across Industries

The potential applications of this collaboration are vast and varied. From accelerating drug discovery with BioNeMo to optimizing warehouses with simulations built on NVIDIA's Omniverse platform, the partnership is poised to drive innovation across multiple sectors. Project Ceiba exemplifies the scale of innovation this collaboration aims for. The supercomputer is intended to support work in fields such as digital biology, robotics, self-driving cars, and climate prediction, where its computational capabilities could enable substantial advances.

Enhancing Large Language Models and Generative AI

A key focus of this collaboration is large language models (LLMs) and generative AI. The new Amazon EC2 instances, featuring NVIDIA GPUs, are tailored to the demanding work of training and deploying LLMs and other AI models. This capability matters in an era when AI models are increasingly complex and data-intensive, requiring substantial computational resources to train and run effectively.

The Future of Cloud Computing and AI

This collaboration between AWS and NVIDIA also marks a milestone in the evolving relationship between cloud computing and AI. As AI becomes more central to business operations and daily life, cloud platforms like AWS play a pivotal role in providing the necessary infrastructure. The partnership helps keep AWS at the forefront of this shift by offering cutting-edge resources to its customers.

Challenges and Considerations

Despite the many positives, this collaboration also presents challenges. The increasing complexity and power of AI systems raise important questions about ethical AI development, data privacy, and security. Both AWS and NVIDIA will need to address these concerns as they continue to push the boundaries of AI capabilities.

Conclusion: A New Era of AI Innovation

The expanded collaboration between AWS and NVIDIA is more than a technological advancement; it is a catalyst for a new era of AI innovation. By combining NVIDIA's GPU prowess with AWS's cloud infrastructure, the partnership has the potential to transform not only how AI technologies are developed and deployed but also how they affect our lives and work. It is a bold step forward that promises to bring the power of AI to a broader audience and to fuel innovation across a spectrum of industries and applications.